Digging Into Data is in the news. According to a New York Times article, Humanities Scholars Embrace Digital Technology by Patricia Cohen (November 16, 2010), the next big idea is data. The article reports on some of the big data interpretation projects, such as those funded by the Digging Into Data program. The Mining with Criminal Intent project is another Digging project.

New York Times Article on Digging Grant

November 19th, 2010

The Old Bailey API – progress report.

October 8th, 2010

We now have a demonstrator site for the Old Bailey API that will form the basis for the ‘Newgate Commons’. Thanks to the hard work of Jamie McLaughling at the HRI in Sheffield, the demonstrator is now fully functioning, and we hope to make a more robust version available for public use within the next couple of months.

As it stands, the demonstrator allows queries on keywords and phrases, and on structured and tagged data, with the results generated either as a search URL or as a Zip file of the relevant trial texts. The basic interface also allows the user to build a complex query and specify the output format.

The demonstrator also allows the search criteria to be manipulated (‘Drilled’ and ‘Undrilled’), and for the results to be further broken down by specific criteria (‘Broken Down by’).

The demonstrator creates a much improved server-side search and retrieval function that generates a frequency table describing how many of its hits contain specific ‘terms’ (i.e. tagged data from the Proceedings, such as verdict). It is fast and flexible, and will form the basis for swapping either full files, or persistent address information by using a Query URL, with both Zotero and TAPoR tools.
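The idea of drilling into a result set and then breaking it down by a tagged term can be illustrated with a minimal Python sketch. It does not use the Old Bailey API itself: the records, the field names (`offence`, `verdict`) and the functions `search` and `break_down_by` are all hypothetical, chosen only to show how a frequency table of hits per term might be produced.

```python
from collections import Counter

# Hypothetical tagged trial records; the field names are illustrative only
# and are not taken from the real Old Bailey markup.
TRIALS = [
    {"id": "t1", "offence": "theft", "verdict": "guilty", "text": "stole a silver watch"},
    {"id": "t2", "offence": "theft", "verdict": "notGuilty", "text": "accused of taking a watch"},
    {"id": "t3", "offence": "killing", "verdict": "guilty", "text": "struck him with a knife"},
    {"id": "t4", "offence": "theft", "verdict": "guilty", "text": "took a handkerchief"},
]


def search(trials, keyword=None, **terms):
    """Return trials whose text contains `keyword` and whose tags match `terms`."""
    hits = []
    for trial in trials:
        if keyword is not None and keyword.lower() not in trial["text"].lower():
            continue
        if all(trial.get(field) == value for field, value in terms.items()):
            hits.append(trial)
    return hits


def break_down_by(hits, field):
    """Build a frequency table: how many hits carry each value of `field`."""
    return Counter(trial[field] for trial in hits)


# "Drill" into theft trials that mention a watch, then break down by verdict.
hits = search(TRIALS, keyword="watch", offence="theft")
table = break_down_by(hits, "verdict")
for value, count in sorted(table.items()):
    print(value, count)
```

"Undrilling" in this sketch is simply repeating the search with one criterion removed, for example `search(TRIALS, offence="theft")`.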

Slices and Ad Hoc Collections

June 28th, 2010

In the Center for History and New Media’s study of text mining techniques and their utility for historical work, funded by the National Endowment for the Humanities, we have been trying out different use cases and techniques on focus groups of historians. As part of that process we have settled on two major kinds of collections and related methodologies that we intend to explore further as part of this Digging into Data collaboration: text mining on “slices” of individual corpora that meet certain criteria, and “ad hoc” research libraries assembled from disparate online collections.

The first kind of collection to mine involves a humanities researcher asking for all of the documents from a corpus with particular metadata or full-text keyword matches. The second uses a personal assemblage of individual documents, generally on a common topic, drawn from a wide range of collections. In Zotero, the tool being used for these tests, the former is equivalent to a “saved search” on a collection (i.e., all matching items from a Boolean search of a collection); the latter, the folders that a Zotero user can set up in the interface to organize their library into subsections manually.

CHNM has begun to look at passing both the slices and the ad hoc text corpora to analytical and visualization services. For slices, our assumption has been that Zotero cannot pull down the entirety of the corpus, but will instead pass a token, URI, or query string to reference the text the analytical service needs, which will then be delivered directly from the collection to the text-mining service. For ad hoc collections, Zotero can bundle the text itself and send it from the client.
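The difference between the two hand-offs can be sketched in Python. This is only an illustration under stated assumptions: the payload shapes, the function names `slice_request` and `ad_hoc_request`, and the `https://example.org/search` address are hypothetical and do not describe Zotero's or any service's actual interface.

```python
import json
from urllib.parse import urlencode


def slice_request(base_url, criteria):
    """Reference a slice by query URL; no document text leaves the client."""
    query = urlencode(sorted(criteria.items()))
    return {"kind": "slice", "query_url": base_url + "?" + query}


def ad_hoc_request(documents):
    """Bundle the texts of a hand-assembled collection into the request itself."""
    return {
        "kind": "ad_hoc",
        "documents": [{"title": d["title"], "text": d["text"]} for d in documents],
    }


# A slice: the analytical service fetches the matching texts from the collection.
slice_payload = slice_request(
    "https://example.org/search",  # hypothetical collection endpoint
    {"offence": "theft", "term": "watch"},
)

# An ad hoc collection: the client sends the texts it already holds.
ad_hoc_payload = ad_hoc_request(
    [
        {"title": "Trial A", "text": "stole a silver watch"},
        {"title": "Pamphlet B", "text": "an account of the late robberies"},
    ]
)

print(json.dumps(slice_payload, indent=2))
print(json.dumps(ad_hoc_payload, indent=2))
```

The slice payload stays small however large the slice is, while the ad hoc payload grows with the collection, which is why the two cases are handled differently.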

For slices, pre-processing of the text by analytical services may have significant advantages, e.g., to establish normalized compression distance and clusters of similarly themed documents. For ad hoc collections, pre-processing of texts is likely less helpful (since the historian has already clustered them for a reason). However, the need for sophisticated text mining services that are more fine-grained, such as word usage or entity extraction, rises. For instance, Zotero recently added a popular mapping service that takes advantage of place-name extraction from full texts within an ad hoc collection.
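For readers unfamiliar with normalized compression distance, here is a minimal Python sketch. It uses the commonly cited formula NCD(x, y) = (C(xy) − min(C(x), C(y))) / max(C(x), C(y)), where C is the compressed size. The choice of zlib as the compressor and the sample texts are assumptions made for illustration, not a description of what any project service actually runs.

```python
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the zlib-compressed data (level 9)."""
    return len(zlib.compress(data, 9))


def ncd(x: str, y: str) -> float:
    """Normalized compression distance between two texts.

    Values near 0 suggest the texts share a lot of material;
    values near 1 suggest they share very little.
    """
    bx, by = x.encode("utf-8"), y.encode("utf-8")
    cx, cy = compressed_size(bx), compressed_size(by)
    cxy = compressed_size(bx + by)
    return (cxy - min(cx, cy)) / max(cx, cy)


# Invented sample texts, for illustration only.
theft = "The prisoner was indicted for feloniously stealing a silver watch, the property of the prosecutor."
assault = "Several witnesses swore that a quarrel arose in the alehouse and blows were exchanged in the yard."

print(round(ncd(theft, theft), 3))
print(round(ncd(theft, assault), 3))
```

A clustering step would compute this distance for every pair of documents in a slice and group those that lie close together. On very short texts the compressor's fixed overhead distorts the scores, so the measure is better suited to full trial texts than to single sentences.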

Criminal Intent project in the news

June 19th, 2010

The Globe and Mail, Canada’s “national newspaper”, has an article today in the Focus section, Supercomputers seek to ‘model humanity’. The article mentions the Digging into Data program and our Criminal Intent project.

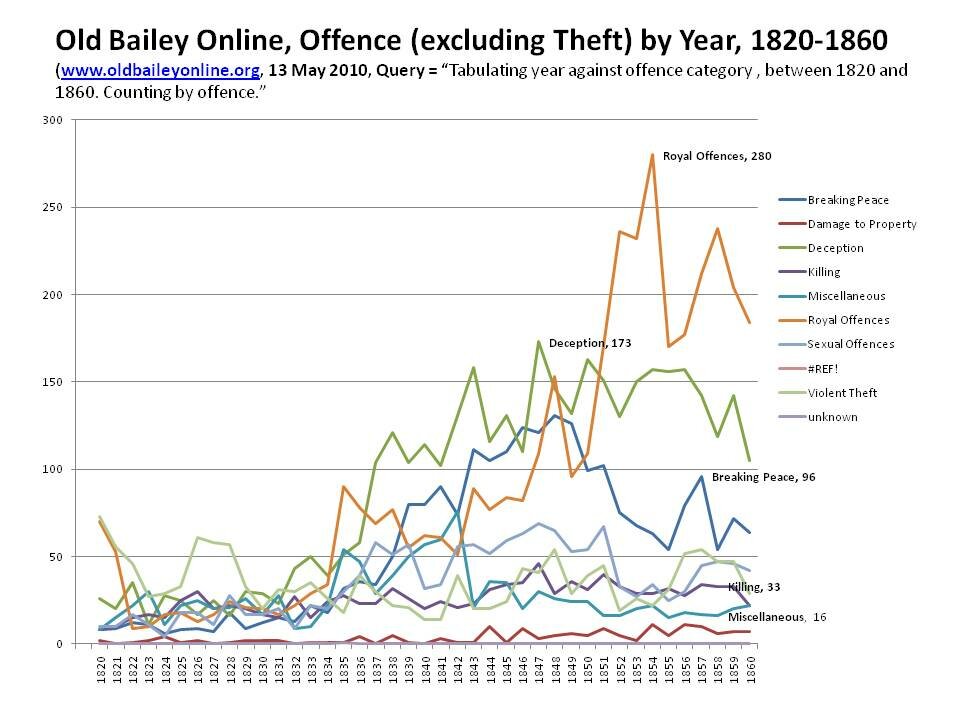

The Old Bailey in Numbers

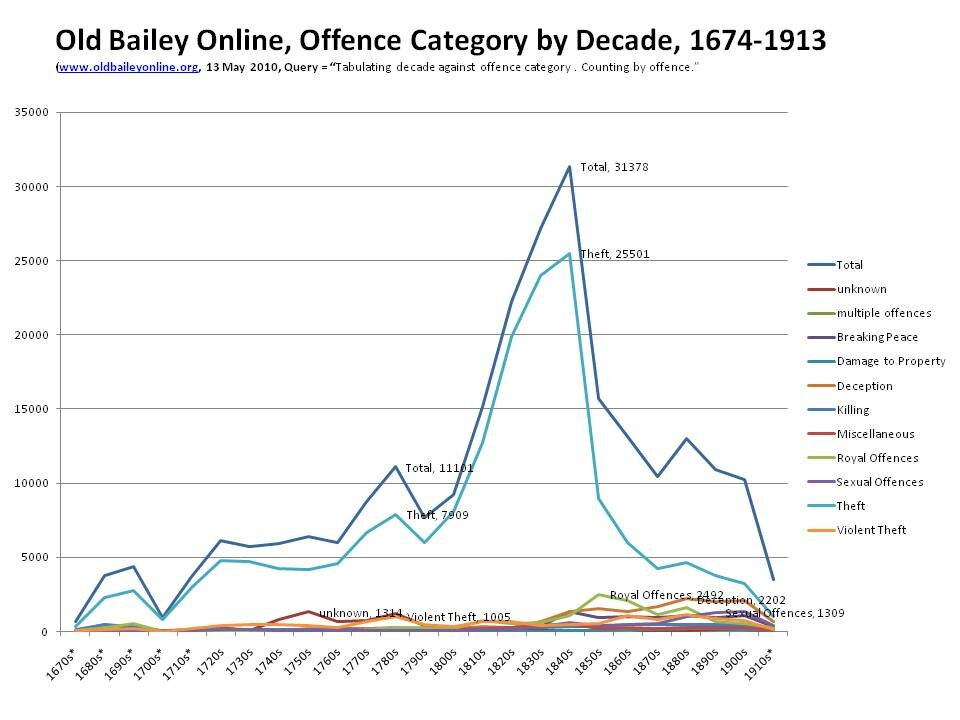

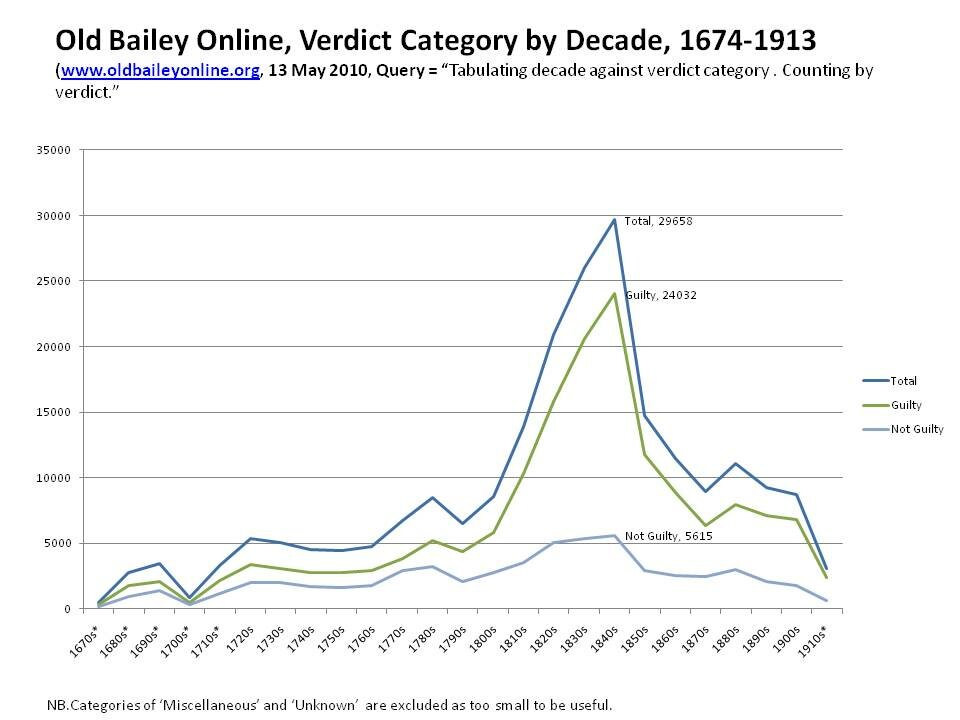

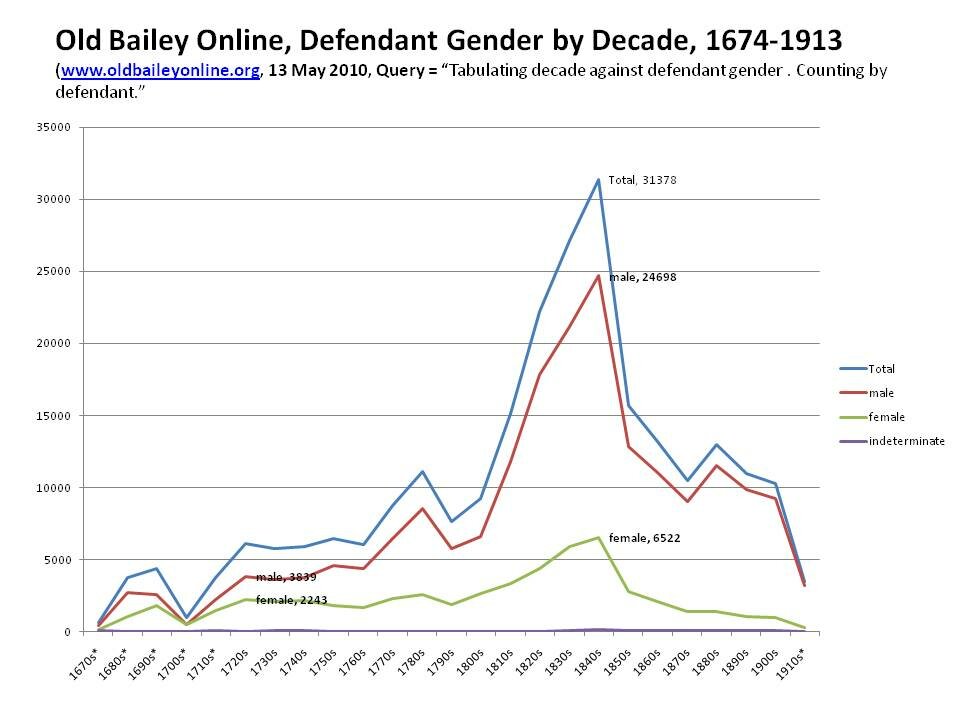

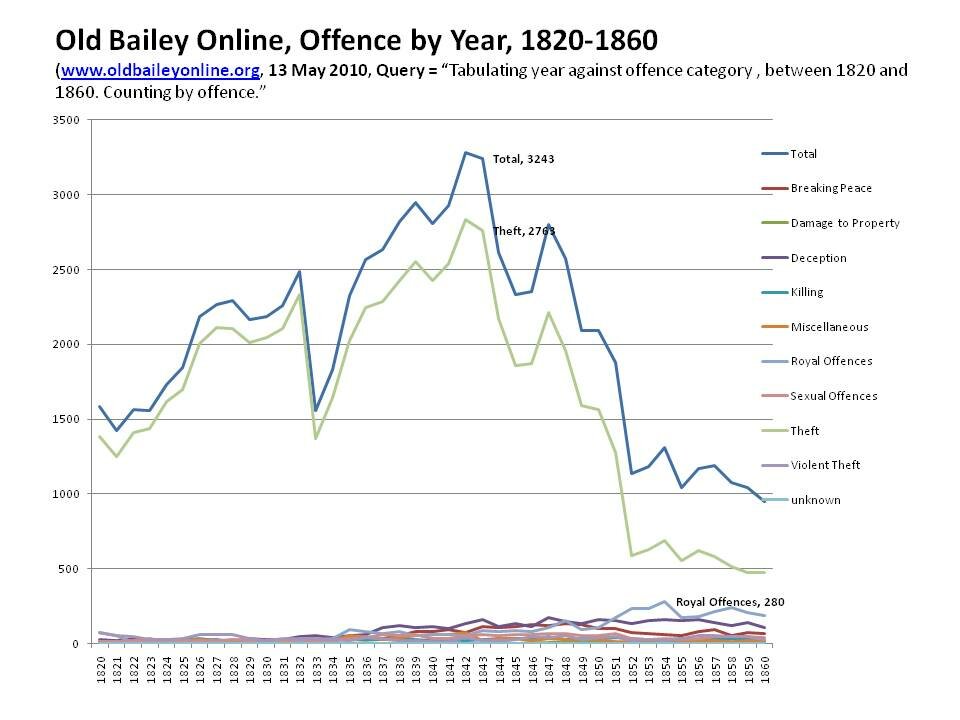

June 18th, 2010

Datamining is about discovering patterns in text, but the Old Bailey Proceedings already incorporates tagged data reflecting what contemporaries thought they were doing. The nature of the crime, the name, gender and age of the defendant, the verdict and punishment were described in words their authors thought beyond misinterpretation. To use datamining to find new patterns, it would help if we could subtract the patterns that we already know about. The huge rise in theft prosecutions in the first half of the nineteenth century, the changing proportion of men and women prosecuted, the evolving nature of the crime itself: each needs to be interrogated to illustrate where changes in language can be explained as the result of changing judicial practice, and where these changes suggest a new and different explanation.

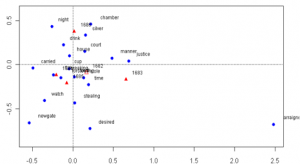

Mind the Gap Results

June 17th, 2010

At the Mind the Gap workshop a Criminal Intent team experimented with a number of promising data visualization and mining techniques. For example, we tried a “data warehouse” visual comparison model using the Tableau software on data formatted for it. The image above shows how we can compare based on structural information. Here is a visualization using correspondence analysis.

Now we have to select the most promising and develop the ideas.

Mind the Gap Workshop

May 6th, 2010

The Criminal Intent project is one of the teams invited to participate in the Mind the Gap: Bridging the Humanities and High Performance Computing workshop. This workshop will bring together humanities research teams that can use High Performance Computing (HPC) with specialists to explore ways of using HPC. The workshop is being organized at the University of Alberta, and Criminal Intent participants from the UK and Canada will use this workshop to identify data-mining techniques to implement.

Using Zotero and TAPoR on the Old Bailey Proceedings: Data Mining With Criminal Intent, JISC Offices, London, 22 February 2010.

April 16th, 2010

Datamining with Criminal Intent, 22 February 2010, slide 1

The notes for a presentation to the British partners in the ‘Digging into Data’ challenge delivered by Tim Hitchcock at a meeting held at the JISC offices in London, 22 February 2010:

Datamining with Criminal Intent:

This project combines three distinct elements and services. First, the Old Bailey Online: 120,000,000 words of double-rekeyed text. It is the largest corpus of accurately transcribed, rather than OCRed, historical text we have, and one that is extremely heavily tagged in XML for content: gender, role, date, location, crime, etc.

Second, Zotero: the citation management plugin of choice. Zotero is an easy-to-use Firefox plugin for gathering, organizing, and analyzing sources (citations, full texts, web pages, images, and other objects). For this project, Zotero provides the all-important environment in which new tools of visualisation and data mining can be tried out and applied.

And finally, the TAPoR Portal: a tool set for the sophisticated analysis and retrieval of text, using the methodologies of quantitative linguistics and the Voyeur suite of visualisation tools for the analysis of text.

The methodology involves creating an API for the Old Bailey that allows subsets of text to be passed off to Zotero, where they can be turned into study collections (either on their own or in combination with sources derived from elsewhere). These study collections will then be passed off to TAPoR and Voyeur for analysis and representation.

The experience of the end user should be seamless, though the processing remains distributed. And the current functionality of the Old Bailey (the search and representation of tagged data) will remain available through Zotero.

The idea is to create an intellectual exemplar for the role of data mining in an important historical discipline, the history of crime, and to illustrate how the fundamental conundrums of historical research on large bodies of text might be addressed. By allowing the analysis and statistical representation of the types of language used in court and how it changed over time, and by comparing these ‘data mined’ patterns to those found in tagged data, “With Criminal Intent” will achieve three things.

First, a whole new way of charting changes in crime reporting and prosecution will be created; second, a new methodology for the consistent discovery of related descriptions will be benchmarked; and finally, a working model of how large corpora can be handled online and in a distributed fashion will be demonstrated.

To take a few mock-ups of what the end user will be able to do:

Datamining With Criminal Intent, 22 February 2010, slide 2

In the first instance, the user can simply collect trials and sessions as they want – either through the current search mechanisms, or through a series of new tools that we are creating to sit with the Old Bailey API, which we are calling the Newgate Commons. These can then sit in Zotero, for both analysis, and easy citation, when you eventually come to write up the results.

You can then use this as the basis for analysis using TAPoR Tools:

Datamining With Criminal Intent, 22 February 2010, slide 3

Datamining With Criminal Intent, 22 February 2010, slide 4

Frequency measures, proximity measures, and word distribution graphs can all be invoked, with the metadata embedded within the original text allowing change over time, and differences between types of trials or content, to be quickly compared.
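As a rough illustration of how embedded metadata lets a frequency measure be compared over time, the following Python sketch computes the relative frequency of a word per decade. The record layout (`year`, `text`), the function name and the sample sentences are invented for this example; it is not the TAPoR implementation.

```python
import re
from collections import Counter, defaultdict


def relative_frequency_by_decade(docs, word):
    """Share of all tokens in each decade that are `word` (case-insensitive)."""
    counts = defaultdict(Counter)
    target = word.lower()
    for doc in docs:
        decade = doc["year"] // 10 * 10
        # Deliberately simple tokenizer: runs of letters and apostrophes.
        tokens = re.findall(r"[a-z']+", doc["text"].lower())
        counts[decade]["total"] += len(tokens)
        counts[decade]["hits"] += tokens.count(target)
    return {
        decade: c["hits"] / c["total"]
        for decade, c in sorted(counts.items())
        if c["total"]
    }


# Invented sample documents carrying a date in their metadata.
docs = [
    {"year": 1785, "text": "He took the watch and ran"},
    {"year": 1789, "text": "The watch was found upon him"},
    {"year": 1801, "text": "She struck him with a knife"},
]

for decade, share in relative_frequency_by_decade(docs, "watch").items():
    print(decade, round(share, 3))
```

Grouping on a different metadata field, such as offence category instead of decade, would give the comparison between types of trials in the same way.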

Or, through Voyeur, you can apply visualisations including Wordle-like clouds, which again can be separated out using the basic metadata for cross comparison and analysis.

And finally, the study collections with their metadata will be available for mash-ups.

Datamining With Criminal Intent, 22 February 2010, slide 5

At the moment, while Old Bailey place names are tagged, they aren’t geo-referenced, and this is the next job.

At the end of the day, all we are really doing is bringing together the largest body of historical text we know, with the tools historians are increasingly comfortable with. We will then have a serious go at applying them, and using them to write some history – history of the sort that changes what people think they can do.

Datamining With Criminal Intent, 22 February 2010, slide 6

Voyeur and Old Bailey

April 15th, 2010

Screen shot of Voyeur with Old Bailey data

With Criminal Intent has connected Voyeur with the Old Bailey Online project in a preliminary prototype. Click here to try Voyeur with a subset of the full Old Bailey Corpus.

ComputerWorld Canada story on Criminal Intent project

April 9th, 2010

ComputerWorld Canada has published a story on the Data Mining With Criminal Intent project, U of A text mining project could help businesses (Rafael Ruffolo, ComputerWorld Canada, March 25, 2010). Canadian participant Geoffrey Rockwell is quoted to the effect that,

“instead of looking for a needle in the haystack, an effective text mining tool will try and show you the shape of the haystack and tell you the words you might want to find.”